Yesterday, as many of you were making the Home Alone face at a WTF tweetstorm from conspiracy theorist/actress Roseanne Barr, a Tesla Model S plowed into a parked police car in California:

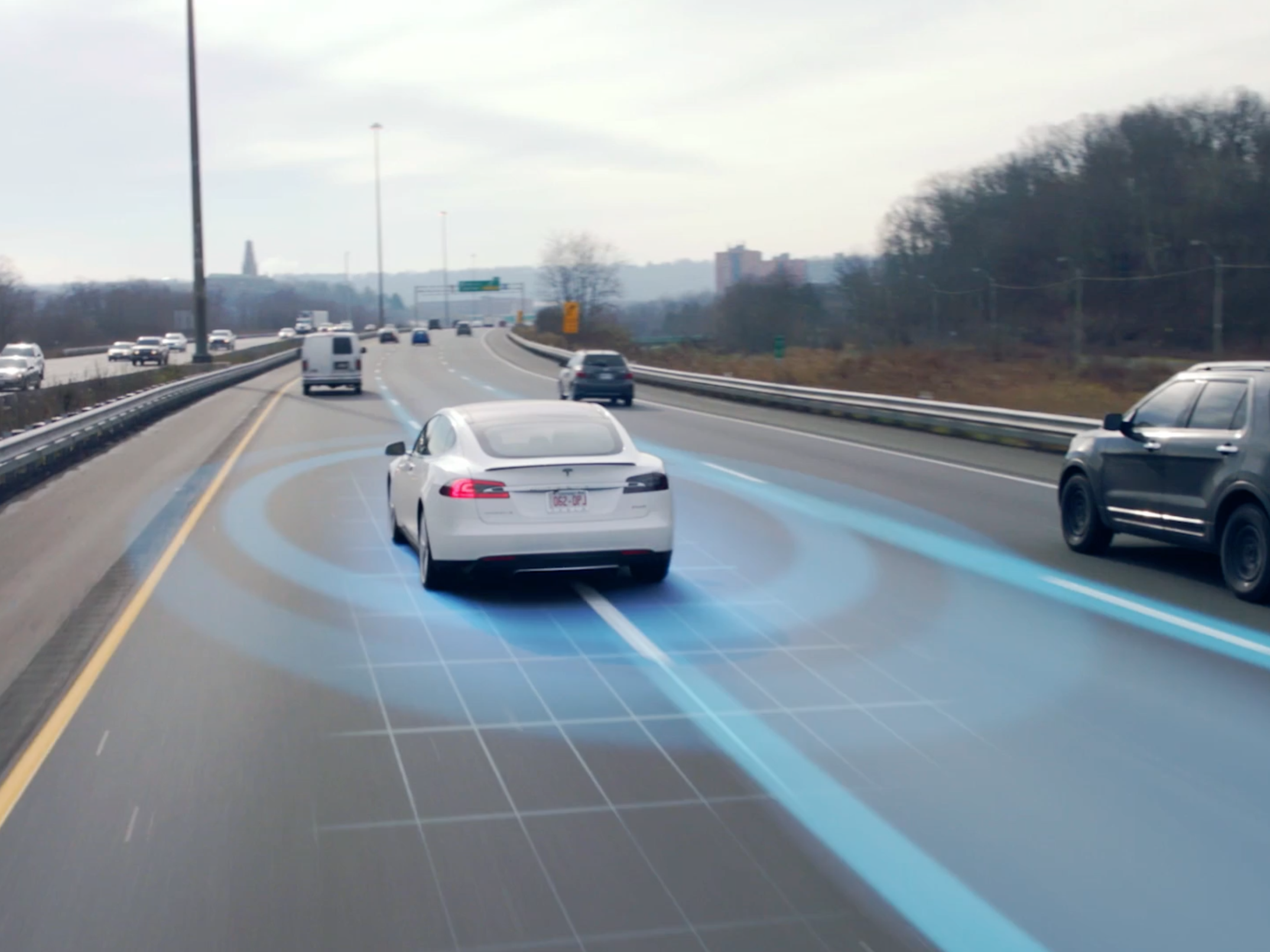

The driver, a 65-year-old from Laguna Niguel, Calif., told officers that he had engaged the car’s partial self-driving system, called Autopilot. “He told us in his own statement he was in driver-assisted mode,” police Sgt. Jim Cota said.

The driver suffered minor injuries, Cota said. The parked cruiser was unoccupied; the officer was standing about 100 feet away, off Laguna Canyon Road, as he responded to a call.

Cota said the luxury electric car crashed in almost the same place as another Tesla about a year ago. The driver in that earlier crash, he said, also pointed to the Autopilot system as being engaged.

We’ve lost count of the number of crashes involving Autopilot in recent months (or in this case, allegedly involving Autopilot), but it certainly seems as though there are more of them. So, as Socrates once said: what’s up with that?

As I see it, there are five possibilities.

1. It could simply be because there are more Teslas on the road these days. As the number of semi-self-driving cars increases, so do the chances that they’ll be involved in accidents. No way around those odds, really.

2. It could also be that, as we begin the long, slow transition to self-driving vehicles, we’re hyper-sensitive to the potential for that technology to fail. Every incident involving Autopilot and similar software will receive greater scrutiny, until we finally get comfortable with autonomous cars and realize, “Hey, they’re not perfect, but they’re a hell of a lot better than Bob.”

3. It could also be that Tesla is quietly overselling Autopilot to consumers, causing drivers to ignore the software’s limitations and treat their vehicles as fully autonomous when they’re not. (This approach to Autopilot caused headaches for Tesla in China and has been linked to at least one fatal collision.)

4. Maybe Tesla isn’t overselling Autopilot, and drivers are just being careless. Stupid is as stupid does.

5. Or maybe there hasn’t been an increase in reports of collisions at all, and I’ve been drunk for the past year or so.

Tesla can’t do much about #1 or #2 (or #5 for that matter). However, it can address items #3 and #4. Either the company needs to roll out the highly anticipated, fully autonomous version of Autopilot that’s been in development for years, or it needs to build in more safeguards to ensure that drivers don’t misuse Autopilot, putting themselves and everyone else on the road at risk. Honestly, this isn’t rocket science; we can leave that to SpaceX.